Generative AI

It would be an understatement to say that Generative AI (GenAI) is having its day in the sun. Most of today’s GenAI, powered by Large Language Models (LLMs), runs in the centralized cloud on power-hungry processors. However, it will soon have to be distributed across different parts of the network and value chain, including devices such as smartphones and laptops, and the edge cloud. The main drivers of this shift will be privacy, security, hyper-personalization, accuracy, and better power and cost efficiency.

AI model “training,” which occurs less often and requires extreme processing, will remain in the cloud. However, the other part, “inference,” where the trained model makes predictions based on the live data, will be distributed. Some model “fine-tuning” will also happen at the edge.

Challenges of today’s cloud-based GenAI

There is no question that AI will touch every part of human and even machine life. GenAI, which is a subset application, will also be very pervasive. That means the privacy and security of the data GenAI processes will be critically important, and unfortunately, there is no easy or guaranteed way to ensure that in the cloud.

Equally important is GenAI’s accuracy. For example, ChatGPT’s answers are often riddled with factual and demonstrable errors (Google “ChatGPT hallucinations” for details). There are many reasons for this behavior. One of them is that GenAI is derived intelligence. For example, it knows 2+2=4 because more people than not have said so. The GenAI models are trained on enormous generic datasets. So, when that training is applied to specific use cases, there is a high chance that some results will be wrong.

Why GenAI needs to be distributed

There are many reasons for distributing GenAI, including privacy, security, personalization, accuracy, power efficiency, cost, etc. Let’s look at each of them from both consumer and enterprise perspectives.

Privacy: As GenAI plays a more meaningful role in our lives, we will share even more confidential information with it. That might include personal, financial, health data, emotions and many details even you or your family and closest friends may not know. You do not want all that information to be sent and stored perpetually on a server you have no control over. But that’s precisely what happens when the GenAI is run entirely in the cloud.

One might ask: we already store so much personal data in the cloud, so why is GenAI any different? That’s true, but most of that data is segregated, and in many cases, access to it is regulated by law. For example, health records are protected by HIPAA regulations. But giving all the data to GenAI running in the cloud and letting it aggregate is a disaster waiting to happen. So, it is apparent that most privacy-sensitive GenAI use cases should run on devices.

Security: GenAI will have an even more meaningful impact on the enterprise market. Data security is a critical consideration when utilizing GenAI for enterprises. Even today, the concern for data security is making many companies opt for on-prem processing and storage. In such cases, GenAI has to run on the edge, specifically on devices and the enterprise edge cloud, so that data and intelligence stay within the secure walls of the enterprise.

Again, one might ask, since enterprises already use the cloud for their IT needs, why would GenAI be any different? Like the consumer case, the level of understanding of GenAI will be so deep that even a small leak anywhere will be detrimental to companies’ existence. In times when industrial espionage and ransomware attacks are prevalent, sending all the data and intelligence to a remote server for GenAI will be extremely risky. An eye-opening early example was the recent case of Samsung engineers leaking trade secrets when using ChatGPT for processing company confidential data.

Personalization: GenAI has the potential to automate and simplify many things in life for you. To achieve that, it has to learn your preferences and apply appropriate context to personalize the whole experience. Instead of hauling, processing, storing all that data and optimizing a large power-hungry generic model in the cloud, a local model running on the device would be super-efficient. That will also keep all those preferences private and secure. Additionally, the local model can utilize sensors and other information in the device to better understand the context and hyper-personalize the experience.

Accuracy and domain specificity: As mentioned, using generic models trained with generic data for specific tasks will result in errors. For example, a model trained on financial industry data can hardly be effective for medical or healthcare use cases. GenAI models must be trained for specific domains and further fine-tuned locally for enterprise applications to achieve the highest accuracy and effectiveness. These domain-specific models can also be much smaller with fewer parameters, making them ideal for running at the edge. So, it is evident that running models on devices or edge cloud is a basic need.

Since GenAI is derived intelligence, the models are vulnerable to hackers and adversaries trying to derail or bias their behavior. A model within the protected environments of enterprise is less susceptible to such acts. Although hacking large models with billions of parameters is extremely hard, with the high stakes involved, the chances are non-zero.

Cost and power efficiency: It is estimated that a simple exchange with GenAI costs 10x more than a keyword search. With the enormous interest in GenAI and the forecasted exponential growth, running all that workload on the cloud seems expensive and inefficient. It’s even more so when we know that many use cases will need local processing for the reasons discussed earlier. Additionally, AI processing in devices is much more power efficient.

Then the question becomes, “Is it possible to run these large GenAI models on edge devices like smartphones, laptops, and desktops?” The short answer is YES. There are already examples like Google Gecko and Stable Diffusion optimized for smartphones.

Meanwhile, if you want to read more articles like this and get an up-to-date analysis of the latest mobile and tech industry news, sign up for our monthly newsletter at TantraAnalyst.com/Newsletter, or listen to our Tantra’s Mantra podcast.

In the fast-moving generative AI (Gen AI) market, two sets of recent announcements, although unrelated, portend why and how this nascent technology could evolve. The first set was Microsoft’s Office 365 Copilot and Adobe’s Firefly announcements, and the second was from Qualcomm, Intel, Google and Meta regarding running Gen AI models on edge devices. This evolution from cloud-based to Edge Gen AI is not only desirable but also needed, for many reasons, including privacy, security, hyper-personalization, accuracy, cost, energy efficiency, and more, as outlined in my earlier article.

While the commercialization of today’s cloud-based Gen AI is in full swing, there are efforts underway to optimize the models built for power-guzzling GPU server farms to run on power-sipping edge devices with efficient mobile GPUs, Neural and Tensor processors (NPU and TPU). The early results are very encouraging and exciting.

Gen AI Extending to the Edge

Office 365 Copilot is an excellent application of Gen AI for productivity use cases. It will make creating attractive PowerPoint presentations, analyzing and understanding massive Excel spreadsheets, and writing compelling Word documents a breeze, even for novices. Similarly, Adobe’s Firefly creates eye-catching images by simply typing what you need. As evident, both of these will run on Microsoft’s and Adobe’s clouds, respectively.

These tools are part of incredibly popular suites with hundreds of millions of users. That means when they are commercially launched and customer adoption scales up, both companies will have to ramp up their cloud infrastructure significantly. Running Gen AI workloads is extremely processor, memory, and power intensive, almost 10x more than regular cloud workloads. This will not only increase capex and opex for these companies but also significantly expand their carbon footprint.

One potent option to mitigate the challenge is to offload some of that compute to edge devices such as PCs, laptops, tablets, smartphones, etc. For example, run the compute-intensive “learning” algorithms in the cloud, and offload “inference” to edge devices when feasible. The other major benefits of running inference on edge are that it will address privacy, security, and specificity concerns and can offer hyper-personalization, as explained in my previous article.

This offloading or distribution could take many forms, ranging from sharing the inference workload between the cloud and the edge to running it fully on the device. Sharing the workload could be complex, as no standardized architecture exists today.

What is needed to run Gen AI on the edge?

Running inference on the edge is easier said than done. One positive thing going for this approach is that today’s edge devices, be it smartphones or laptops, are powerful and highly power efficient, offering a far better performance-per-watt metric. They also have strong AI capabilities with integrated GPUs, NPUs, or TPUs. There is also a strong roadmap for these processor blocks.

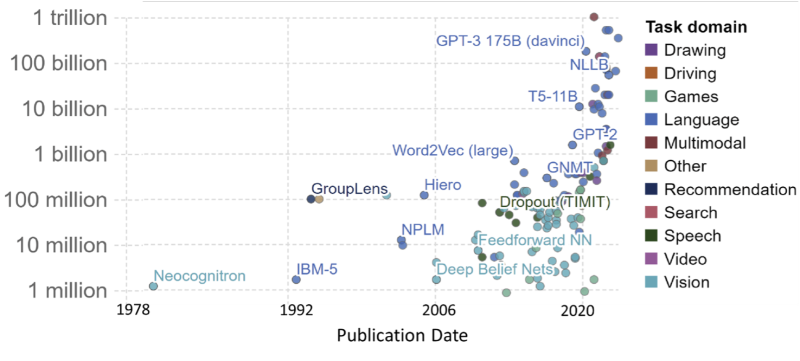

Gen AI models come in different types with varying capabilities, including what kind of input they utilize and what they generate. One key factor that decides the complexity of the model and the processing power needed to run it is the number of parameters it uses. As shown in the accompanying figure, the model size ranges from a few million to more than a trillion parameters.

Gen AI models have to be optimized to run on edge devices. The early demos and claims suggest that devices such as smartphones can today run models with typically one to several billion parameters. Laptops, which can utilize discrete GPUs, can go even further and run models with more than 10 billion parameters now. These capabilities will continue to evolve and expand as devices become more powerful. However, the challenge is to optimize these models without sacrificing accuracy, or with only minimal and acceptable error rates.
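To make the link between parameter count and device capability more concrete, here is a rough back-of-the-envelope sketch in Python. It estimates the memory needed just to hold a model's weights at different numeric precisions. The function name `weight_memory_gb` and the chosen model sizes are illustrative assumptions, and the estimate ignores activations, caches, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Illustrative model sizes (assumed for this sketch): 1B, 7B, and 70B parameters.
MODEL_SIZES = (1e9, 7e9, 70e9)
# Bit-widths discussed in the article: 32-bit down to 4-bit.
BIT_WIDTHS = (32, 16, 8, 4)

for params in MODEL_SIZES:
    row = ", ".join(
        f"{bits}-bit: {weight_memory_gb(params, bits):.1f} GB" for bits in BIT_WIDTHS
    )
    print(f"{params / 1e9:.0f}B parameters -> {row}")
```

Under these assumptions, a 7-billion-parameter model needs about 28 GB for its weights at 32 bits each but about 3.5 GB at 4 bits, which is one reason lower-precision models are a better fit for smartphones and laptops.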

Optimizing Gen AI models for the edge

There are a few things that help in optimizing the Gen AI model for the edge. First, in many use cases, inference is run for specific applications and domains. For example, inference models specific to the medical domain need fewer parameters than generic models. That should make running these domain-specific models on edge devices much more manageable.

Several techniques are used to optimize trained cloud-based AI models for edge devices. The top ones are quantization and compression. Quantization involves converting the standard 32-bit floating-point models to 16-bit, 8-bit, or 4-bit integer models. This substantially reduces the processing and memory needed, with minimal loss in accuracy. For example, a Qualcomm study has shown that these can improve the performance-per-watt metric by 4 times, 16 times, and 64 times, respectively, often with less than 1% degradation in accuracy, depending on the model type.
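As a minimal illustration of the quantization idea, the Python sketch below maps floating-point weights to signed 8-bit integers using a single scale factor, then converts them back to measure the rounding error. This is a simple symmetric, per-tensor scheme chosen for clarity; it is not necessarily the method used in the Qualcomm study, and the function names and the random test weights are assumptions made for this example.

```python
import random


def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale factor for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


random.seed(0)
# Hypothetical weights: small values centered on zero, as is common in trained models.
weights = [random.gauss(0.0, 0.05) for _ in range(1000)]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"scale = {scale:.6f}, worst-case rounding error = {max_error:.6f}")
```

Each weight now needs 8 bits instead of 32, and the worst-case error per weight is bounded by half the scale factor. Whether that error is acceptable depends on the model, which is why accuracy has to be checked after quantization.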

Compression is especially useful in video, image, and graphics AI workloads, where significant redundancies exist between successive frames. Those can be detected and skipped, which substantially reduces computing needs and improves efficiency. Many such techniques could be utilized for optimizing Gen AI inference models for the edge.
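One simple way to picture the frame-redundancy idea is sketched below in Python: a frame is passed on for processing only if it differs enough from the last frame that was processed. This is an illustrative toy, not any vendor's actual technique; the function names, the tiny four-pixel "frames," and the threshold value are all assumptions made for the example.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute per-pixel difference between two equally sized frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def select_frames(frames, threshold=2.0):
    """Return indices of frames that differ enough from the last processed frame."""
    selected = []
    last_processed = None
    for index, frame in enumerate(frames):
        if last_processed is None or mean_abs_diff(frame, last_processed) > threshold:
            selected.append(index)
            last_processed = frame
    return selected


# Hypothetical frames, each flattened to four pixel intensities.
frames = [
    [10, 10, 10, 10],
    [10, 11, 10, 10],  # nearly identical to frame 0, so it is skipped
    [10, 10, 11, 10],  # nearly identical to frame 0, so it is skipped
    [90, 80, 70, 60],  # large change, so it is processed
    [90, 80, 71, 60],  # nearly identical to frame 3, so it is skipped
]

kept = select_frames(frames)
print(f"Processing {len(kept)} of {len(frames)} frames: {kept}")
```

In this toy example only two of the five frames would be sent to the model, which is the kind of saving the paragraph above describes.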

There has already been considerable work and some early success with this approach. Meta’s latest Llama 2 (Large Language Model Meta AI) Gen AI model, announced on July 18th, 2023, will be available for edge devices. It comes in sizes ranging from 7 billion to 70 billion parameters. Qualcomm announced that it will make Llama 2-based AI implementations available on flagship smartphones and PCs starting in 2024. The company has also demonstrated ControlNet, a 1.5-billion-parameter image-to-image model that currently runs in the cloud, running on a Samsung Galaxy S23 Ultra. In Feb 2023, it demonstrated Stable Diffusion, a popular text-to-image model with 1 billion parameters, running on a smartphone. Intel showed Stable Diffusion running on a laptop powered by its Meteor Lake platform at Computex 2023. Google, when announcing its next-generation Gen AI PaLM 2 models during Google I/O 2023, talked about a version called Gecko, which is designed primarily for edge devices. Suffice it to say that much research and development is happening in this space.

In closing

Although most of the Gen AI today is being run on the cloud, it will evolve into a distributed architecture, with some workloads moving to edge devices. Edge devices are ideal for running inference, and models must be optimized to suit the devices’ power and performance envelope. Currently, models with several billion parameters can be run on smartphones and more than 10 billion on laptops. Even higher capabilities are expected in the near future. There is already a significant amount of research on this front by companies such as Qualcomm, Intel, Google, and Meta, and there is more to come. It will be interesting to see how that progresses and when commercial applications running Gen AI on edge devices become mainstream.

Qualcomm vs. Arm - Trial Coverage

Background

Qualcomm is one of the largest customers of Arm. However, ever since Qualcomm announced that it was acquiring Nuvia, the two companies have been at odds regarding their license contracts, culminating in today’s court trial. Today was the first day of this jury trial.

Check out my article series for more details on the fight between the two companies.

Summary of Day 1

On the first day, both companies fired their first salvos through opening statements and testimonies and tried to set the narrative for the other party. Arm is trying to convince the jury that Qualcomm acquired Nuvia to save on license costs. According to Arm, Qualcomm knows that it needs a new license to use Nuvia technology but doesn’t want to negotiate with Arm. Arm says it tried to negotiate with Qualcomm, but since the two couldn’t agree on new terms, it had no choice other than to sue.

On the other hand, Qualcomm is trying to project that it acquired Nuvia because Arm was falling behind on performance, and that its current ALA with Arm covers Nuvia technology. Additionally, Qualcomm argues that Arm is asking for higher licensing fees to cater to the demand of its parent company, SoftBank, to increase its revenue.

In my view, Arm’s opening statement was simple and told a complete story. It was presented with a soft, almost victim-like demeanor. Qualcomm’s statement was more assertive and included many strong facts (e.g., Arm internal communications saying Qualcomm has a “bombproof” ALA).

The testimonies were quite informative and revealed many interesting facts, some rumored and others unknown (e.g., Arm considered a fully vertically integrated approach). Arm’s first testimony was bungled during cross-examination, the second was neutral, and the last one was powerful.

The day started balanced, swung toward Qualcomm, and ended with a neutral/slight advantage to Qualcomm.

Come back tomorrow for the second day’s update.

Highlights of Day 1:

The first day started with pretrial discussions, opening statements from both companies, and testimonies from the plaintiff, Arm. Today’s witnesses were Arm Chief Commercial Officer Will Abbey, SVP Paul Williamson, and CEO Rene Haas, in that order.

Key points of the Arm opening statement

- Arm gave a deeply discounted licensing model to Nuvia, expecting to benefit when the company was acquired. The contract clearly states that an acquisition needs approval from Arm to assign the license to the new company.

- It used a metaphor of piano keys to explain its licensing model – its tech tells what note each key should play, and it doesn’t matter how big the keys look, what music they create, etc.

- Claimed that Qualcomm bought Nuvia to reduce its costs because the royalty rate for an Architecture License Agreement (ALA) is much lower than for a Technology License Agreement (TLA). Showed a Qualcomm email citing a figure of about $1.4B annually.

- Responded to Qualcomm’s claim that Arm specifications are openly available on Arm’s website, and hence not confidential, by saying that a license is still needed to use them.

Key points of the Qualcomm opening statement

- Qualcomm reiterated that it doesn’t need the Nuvia ALA, as its own ALA with Arm covers Nuvia technology. Shared a lot of Arm’s internal emails/messages to show that Arm also understood this (Qualcomm has a “bombproof” license) but sued Qualcomm to increase its revenue.

- Used a metaphor of a home to explain its view of licensing – the specification of doors, windows, etc., is similar to the Arm license, but Qualcomm still needs to build the home, which is significant work and innovation. Developing the underlying RTL and creating processes is akin to this.

- Said Qualcomm had to buy Nuvia as Arm was falling behind in performance

- Arm’s deal made Qualcomm licensing 100-400% more expensive and included conditions like Nuvia engineers not working on new architecture design for 3 years, etc.

- Showed a lot of Arm internal communication regarding how the change in Arm management/ownership pushed it to go hard against Qualcomm

Key Points from the testimonies:

Will Abbey

- Long-time Arm executive who was responsible for the Nuvia and Qualcomm relationships

- Reiterated Arm’s stance; said this is the first and only time in its entire history that Arm has sued a customer, and the only instance in which a license was terminated (Nuvia)

- Said Qualcomm requested, and Arm assigned, licenses when Qualcomm acquired CSR and Atheros. These were TLAs, whereas Nuvia had an ALA

- Documents revealed Qualcomm ALA/TLA licensing rates:

  - ALA – 1.1% / $0.58

  - TLA – 5.3% / $2.2

- Surprised that Nuvia executives were never present in any negotiations after the acquisition

- During cross-examination, Qualcomm’s lawyer pointed out many inconsistencies between his statements during the sworn deposition and today’s testimony (e.g., during the deposition, Will had said Nuvia canceled its license, but today he said Arm canceled it). For that, he had to explain that he didn’t prepare well for the deposition, and preparation for the testimony “triggered” more memories. However, the deposition was almost one year closer to the actual events than today’s testimony.

Paul Williamson

- Currently leads the IoT business but was responsible for the mobile business during the Nuvia acquisition

- Reiterated many of Arm’s stances and things expressed by Will, such as the CSR and Atheros assignments, and others

- During cross-examination, many of Arm’s internal emails/messages about what the Qualcomm ALA covers were revealed. One key exchange was between him and Arm’s licensing person, where the latter opined that the Qualcomm ALA covered Nuvia technology

- Discussion revealed the Qualcomm ALA is valid till 2028, with an option to extend for five more years with a $1M/year fee

- Arm offered a new deal to Qualcomm, called the “TC model,” which had total processor licensing (CPU, GPU, & others) for a ~500% higher licensing price

- Arm sent letters to its (and Qualcomm’s) customers and PC OEMs about Qualcomm working on products for which Arm had canceled licenses.

Rene Haas

This was probably the longest and most consequential testimony.

- 11 years at Arm, 35 years in the semiconductor industry

- He said that since he authorized the litigation, he felt he had to come and testify

- Talked about impressive Arm growth, which really took off in 2013. Highlighted looking to add more value and increase revenue

- Shared a slide on how Arm is looking to outperform Nuvia with Blackhawk (Cortex-X925)

- Cross-examination was full of discussion around tons of Arm internal communications

- Lots of messages and emails on how Qualcomm’s (and another player’s, most likely Apple’s) ALA rates are low and need to be unwound to increase Arm revenue

- The final offer to Qualcomm was a one-time $123M assignment fee, the current ALA for mobile, and different rates for Compute, Auto, and others, which was rejected

- Disagreed with the previous CEO’s “finding middle ground with Qualcomm” approach

- Discussions with Masayoshi Son of SoftBank (which owns 90% of Arm) to keep the pressure up on Qualcomm and to increase Arm revenue overall

- Arm only has between 5 and 10 ALA customers

- Some of Arm’s disclosures to the UK government during Nvidia’s attempted acquisition of Arm were not consistent with its positions elsewhere, and Rene had to explain the reasons (mostly timing)

- Arm considered a fully vertical model, making its own processor products. Even joked about how that would blow everybody else away

- Also suggested collaborative vertical integration with Samsung LSI for Samsung devices

Prakash Sangam is the founder and principal at Tantra Analyst, a leading boutique research and advisory firm. He is a recognized expert in 5G, Wi-Fi, AI, Cloud and IoT. To read articles like this and get an up-to-date analysis of the latest mobile and tech industry news, sign-up for our monthly newsletter at TantraAnalyst.com/Newsletter, or listen to our Tantra’s Mantra podcast.

Summary of Day 2

Check out the summary of Day 1 here.

The second day had a very interesting morning session and a boring afternoon session. The most important discussion was whether processor design and RTL are a derivative of Arm’s technology. During the testimony of Gerard Williams, Nuvia founder and CEO and now Qualcomm SVP, Arm’s lawyer tried to corner him into agreeing to that. She even pointed to the Nuvia ALA text, which seems to suggest that Nuvia’s technology is a derivative of Arm’s technology (Arm ARM – Architecture Reference Manual). But Gerard vehemently opposed and fought against it throughout his lengthy testimony.

This assertion of derivative seems an overreach and should send a chill down the spine of every Arm customer, especially the ones that have an ALA, which include NXP, Infineon, TI, ST Micro, Microchip, Broadcom, Nvidia, MediaTek, Qualcomm, Apple, and Marvell. No matter how much they innovate in processor design and architecture, it can all be deemed Arm’s derivative and, hence, its technology.

Today, Arm finished its testimonies, and Qualcomm started with its witnesses. Tomorrow, I expect to see Qualcomm vehemently fighting against Arm’s assertion and most likely see Qualcomm CEO Cristiano Amon take the witness stand.

Come back tomorrow for the third day’s update.

Highlights of Day 2:

The second day saw testimonies from Gerard Williams, a couple of expert witnesses, Jonathan Weiser, SVP of Qualcomm, and a product manager from Arm.

- Arm tried to link Nuvia’s processor design work to the ALA in many ways. Their lawyer grilled Gerard by showing many different parts of the contract, his presentations, internal emails, messages, and other things to prove this point. Gerard did a great job defending against all of them. However, an obscure explanation of the derivatives of Arm’s technology (known as the ARM – Architecture Reference Manual) in Nuvia’s ALA seems to indicate that Nuvia’s architecture (design and RTL code) is part of it. Gerard disagreed with it.

- I am not a lawyer, so I don’t know how to interpret this seeming mention or how strongly it will stand. More importantly, I don’t know how much weight the jury will give it vis-à-vis all the testimony they heard yesterday.

- There were three expert witnesses today, two from Arm and one from Qualcomm. The first was Dr. Robert Colwell. He tried to say that processor designs are dependent on the Arm ARM, but buckled during cross-examination because of inconsistencies in his responses. The second was Dr. Shuo-Wei (Mike) Chen, who analyzed the RTL code of Nuvia and Qualcomm cores and saw similarities, which was expected. The third was Dr. Murali Annavaram, who opined on Qualcomm’s claim about Arm using some of Nuvia’s other IP without permission.

Key Points from the testimonies:

Gerard Williams

This was the longest and most consequential testimony.

- Nuvia founder and CEO, now Qualcomm SVP, previously worked for Apple and Arm

- He looked a little unsure in the beginning but became confident as the questioning progressed

- Rejected Arm’s claim that Nuvia needed approval from Arm for the acquisition

- Said he made sure during ALA negotiations that Nuvia had ownership of all the technology it developed, independent of Arm’s technology

- Asserted that processor design and RTL code are independent of Arm’s ARM

- Processors become Arm-compatible after they are certified as such, not when designing

- When Nuvia designs were transferred to Qualcomm, they were still in design, so they were not yet Arm-compatible

- Reiterated that the Arm ARM is freely available on the internet for anybody to download, hence not confidential

- Only the latest versions of the ARM are confidential, and they will eventually be released on the internet

- In compliance with the ALA, Nuvia destroyed confidential information (the latest, non-public ARM documents) during the acquisition

- Claimed the ARM is not a recipe for creating a processor. Even if somebody fully studied the ARM, they couldn’t design a processor

- Explained how designing processors needs a lot of engineering talent and experience. That’s why he built a team with about 300 engineers (150 CPU experts + 150 System/SoC experts)

- Nuvia first tried to use Arm’s TLA but ultimately decided to build its own cores using an ALA, hence it ended up with both licenses

- The Nuvia ALA had a clause that made it null after any acquisition

- Disagreed that Nuvia got a deep-discount deal; said it paid $22M

Dr. Robert Colwell – Arm’s expert witness

- Ex-Intel, Ex-DARPA, previously consulted with Qualcomm

- Opined that processor design and RTL are dependent on the architecture

- Asserted that there was commonality in Arm RTL and Nuvia RTL

- Buckled under cross-examination because of inconsistencies between the deposition statements and today’s testimony (ISA is useless, stats used, etc.)

Dr. Shuo-Wei (Mike) Chen – Arm expert witness

- Professor at USC, MS & PhD at UC Berkeley

- Studied the commonality between Nuvia and Qualcomm cores

- Code commonality: 57% in Compute, 47% in Mobile, 37% in Auto, and 20% in new unnamed platforms

- This is no surprise, as Qualcomm has readily agreed that it built its cores on the Nuvia design

- Since Compute was the first commercial solution, it had the highest commonality, which went down as the design was introduced into other domains

Jonathan Weiser

- 30 years at Qualcomm, was involved in negotiating ALA and TLA

- Qualcomm ALA and TLA signed in 2013, after 2-3 years of negotiations

- Earlier TLA was signed in 1995, and TLA in 2003

- ALA was amended in 2017

- Qualcomm informed Arm in Jan 2021 that Nuvia engineers had joined Qualcomm and would be covered by the company’s ALA

Summary of Day 3

Check out the summary of Day 1 here and Day 2 here.

Significant progress was made on the third day. At the end of the day, the case has boiled down to two questions to be decided by the jury: 1) Whether Qualcomm and/or Nuvia breached a specific section of the Nuvia ALA (15.1a), which requires Arm to prove that Qualcomm was required to destroy all products using the Nuvia technology that Qualcomm acquired; 2) Whether Qualcomm’s products are licensed under the Qualcomm ALA. The first was the decision by Arm lawyers, and the second is the confirmation asked by Qualcomm.

The day saw intense cross-examination of Qualcomm expert witness Dr. Murali Annavaram and the testimony of Qualcomm CEO Cristiano Amon. Arm’s lawyer tried to make Dr. Annavaram say that cores are Arm technology, which he didn’t. Cristiano explained that Arm’s lagging behind Apple in performance was the main reason for buying Nuvia, and that he would be happy to get a TLA from Arm even now if it provided high-performance cores.

All the testimonies are now completed. Tomorrow, both parties will make their closing statements, and jury deliberations will begin. If the jury decides quickly, we might have a decision by tomorrow evening.

Come back here tomorrow for the fourth day’s update.

Highlights of Day 3:

- Cross-examination of Dr. Annavaram was pretty intense. There was a lot of back and forth on defining what Arm Technology is. Although there is a definition of the term in the ALA, the discussion focused on the explanation of “Confidential Information,” which seems to suggest that an “Architecture Compliant Core” is an Arm derivative. Dr. Annavaram didn’t agree to that at all. This is the same thing that was the bone of contention during Gerard Williams’s testimony on Day 2.

- The “Arm Technology” term has been used many, many times during the last three days. It does have a specific definition in the contract. That definition doesn’t include any of the work, including design, RTL, and lots of other things, done by companies like Nuvia to design processors. This is in contrast to how it is described in the definition of “Confidential Information” mentioned above. It will be interesting to see how the jury will view this.

- Qualcomm’s counsel turned Arm’s piano analogy on its head. Arm compared its ISA to a piano keyboard design during the opening statement and used it throughout the trial. It claimed that no matter how big or small the piano is, the keyboard design remains the same and is covered by its license. Qualcomm’s counsel extended that analogy to show how ridiculous it would be to say that because you designed the keyboard, you own all the pianos in the world, suggesting that this is what Arm is trying to do.

Key Points from the testimonies:

Video depositions from Arm engineers and managers

- Internal messages suggesting Arm has no basis to sue Qualcomm upon the Nuvia acquisition, but only after the company ships actual products

- Another opinion that the Arm ARM doesn’t help in designing processors or developing any features

- For the compute platform (Hamoa), Qualcomm started the project with the Nuvia implementer ID and changed it to that of Qualcomm

- Emails suggesting Qualcomm was complaining that Arm substantially increased its royalty rates after the company disbanded its custom core effort

- Messages suggesting Qualcomm complained that Apple’s cores have 20-25% better performance than Arm cores

Dr. Murali Annavaram – Qualcomm expert witness

-

Professor at USC, previously worked for Intel and Nokia, lots of awards/recognitions

-

Reviewed source code of Qualcomm and Nuvia RTL

-

Explained what microarchitecture is and how designing cores involves switching 15 billion transistors on and off etc, in simple terms to the jury

-

1000s of microarchitecture blocks in a chip – computing, storage, memory, scheduler etc.

-

Opined strongly that Nuvia/ Qualcomm CPU cores do not use or are derived from Arm technology

-

Claimed that the commonality between Arm RTL and Qualcomm RTL is less than 1%

-

RTL codes are regularly regenerated, so most of the codes in cores will be Qualcomm’s

-

The commonality between Nuvia/Arm RTL codes highlighted by Arm was mostly in comments, not in code

-

Long winding back and forth between Arm counsel and Dr. Annavaram on whether Arm complaint cores are derivative of Arm technology. The confusion stems from how they are defined differently in two parts of the contract.

-

Annavaram said that he is not a lawyer and can’t opine on how it is defined in the contract. But based on his technical knowledge, the cores are not a derivative

Cristiano Amon

-

Explained the Qualcomm and Arm relationship over the last few years – it started with an ALA for building the first smartphone SoC, when Arm didn’t have its own core

-

Arm came to Qualcomm with TLA deal and made the company stop its custom core effort

-

Arm fell behind Apple in performance and even behind Intel and AMD in PCs

-

Had a few executive-level meetings with Arm to complain about it

-

Bought Nuvia because of Gerard Williams, and his reputation at Apple building Apple M series chips

-

Worked with Arm’s then-CEO Simon Segars to resolve the Nuvia licensing issue but in vain

-

Got a call from TM Roh, head of Samsung Mobile, asking for assurance of supply of Snapdragon chips. He was worried because the chairman of Arm board and SoftBank CEO Masayoshi Son had told him that Qualcomm’s Arm license will expire in 2025 and may not be extended (actually, the contract runs till 2028 with an option to extend for five more years)

-

Agreed to Arm’s “piano” analogy but gave qualifications (e.g., an accordion also has the same keys)

-

Went to Nuvia first to create a custom CPU, but it didn’t work out

-

Strongly said to many questions that Qualcomm is committed to honoring all contracts, not just license ones

-

Qualcomm CFO’s initial Nuvia price estimate was $500M

-

Reiterated that he didn’t believe Qualcomm needed permission from Arm to buy Nuvia but asked for approval anyway to maintain a good relationship

-

Said it is very unreasonable for Arm to ask for the destruction of Nuvia’s work, which was not dependent on Arm’s license

-

Was asked about his compensation, to which he replied that he didn’t remember and said it’s public information

Summary of Day 4

Check out the summaries of previous days: Day 1, Day 2, and Day 3.

Today was the last day of the hearing. Both parties gave their closing statements, which were primarily rehashes of their arguments so far. The presiding judge gave final jury instructions, and the jury started deliberations around noon. They will have to decide on three questions, and their verdict on each of the questions will have to be unanimous. They deliberated until the end of the day (4:30 p.m.) but didn’t converge. They will return tomorrow at 9 a.m. The judge might also discuss remedies tomorrow.

I am hopeful that the jury will come up with a verdict tomorrow, or else they will have to come back on Jan 3rd next year because of the Christmas holidays. That would be a nightmare scenario, as they might have forgotten the testimonies and other details by then.

Come back here tomorrow for the fifth and possibly the final day’s update.

Highlights of Day 4:

-

There are three questions (really two, as 1 & 2 are similar) the jury has to answer, as I had discussed in the Day 2 blog:

-

Whether Nuvia has breached section 15.1(a) of Nuvia ALA?

-

Whether Qualcomm has breached section 15.1(a) of Nuvia ALA?

-

Are Qualcomm products using Nuvia technology covered under Qualcomm ALA?

-

Section 15.1(a) refers to destroying Arm’s confidential information after ALA cancellation. The second question is interesting, as Qualcomm was never a party to the Nuvia ALA. However, Arm claims that since Qualcomm enjoyed the benefits of the technology developed under the Nuvia ALA, it has to be associated with that contract. The third question is Qualcomm’s counterclaim, which is to protect it from any future litigation related to Nuvia technology.

-

Arm, in its closing statement, reiterated the same arguments from the last three days and tried to address some of the issues raised by Qualcomm

-

Nuvia/Qualcomm needed Arm consent for acquisition

-

Nuvia breached the confidentiality requirements of the ALA by sharing Arm’s confidential information with Qualcomm

-

Qualcomm bought Nuvia because of cost savings, not performance issues

-

Arm Technology includes Architecture Compliant Core (check out Day 2 blog for details)

-

Many things Qualcomm raised are irrelevant (e.g., SoftBank/ Masayoshi Son’s influence, letters to Qualcomm customers, CEO compensation, etc.)

-

Arm’s presentation was more direct – this is a contract case, and here are our arguments on why we are right.

-

Qualcomm’s closing statement also included a rehash of arguments presented during the course of the trial, as well as a few new things:

-

Where is the harm? Arm never claimed any harm or damage

-

Arm sent letters to over 40 OEMs suggesting Qualcomm had breached the Arm contract

-

Processor design, RTL code, and other Nuvia Technology were not a derivative of Arm technology

-

There was no assignment of the ALA to Qualcomm – Qualcomm’s ALA covers all its products (the contract states “work done by itself & others”)

-

Arm Architecture Reference Manual is a publicly available document, not confidential

-

The primary reason for Arm to bring this case is to increase revenue from Qualcomm, done at the behest of the Chair of the Board

-

Unlike Arm’s, Qualcomm’s approach was a little bit emotional, mentioning harm to the chip industry and ecosystem, etc.

Summary of Day 5

Check out the summaries of previous days: Day 1, Day 2, Day 3, Day 4.

The final day of the trial was a slow-moving soap opera for most of the day, with a nail-biting finish that brought victory to Qualcomm.

The day started slowly, with the jury resuming deliberations and the parties scheduling the remedy discussion. Around 11 am, the judge summoned the parties back into the room and informed them that the jury was deadlocked on Question 1 (Whether Nuvia breached Nuvia ALA) and had decided on Question 2 (Whether Qualcomm breached Nuvia ALA) and Question 3 (Are Qualcomm products using Nuvia technology covered under Qualcomm ALA?).

After receiving deadlock-related instructions, the jury was sent back with additional time. At around 1 pm, they came back without any change. At that point, the judge decided to accept the two decisions and announced the verdict:

Question 1 – No decision; Question 2 – Qualcomm won; Question 3 – Qualcomm won

Before the decision, Arm’s lawyer tried to convince the judge not to accept the two verdicts, but none of the arguments persuaded her. Additionally, the judge put a high bar for retrial. She will order mediation and is not keen to see them back in court quickly. That makes this case a sure win for Qualcomm.

With that, Qualcomm kept its impressive streak of winning major court trials. Recently, it won against the FTC, won one case against Apple and settled another, and has now won against Arm (actually SoftBank, which owns 90% of Arm).

Come back for… well, today was the final day. Happy Holidays! Check out our other content on the site!

Highlights of Day 5:

-

When the court opened, the judge asked both parties whether they wanted to discuss remedies today before the jury verdict or postpone it to sometime in January. Both lawyers chose the latter

-

About two hours into the day, the judge (through the clerk) asked both parties whether she should check with the jury on progress. But the Arm lawyer declined

-

Around 11 am, the judge informed everyone that the jury was deadlocked on Question 1 and had decisions on Question 2 and Question 3

-

The jury was summoned back to the courtroom, and the Judge gave them jury instructions related to deadlock and explained what happens if they don’t break the deadlock (retrial with a jury from the same jury pool)

-

The jury was given another hour or so to see whether they could converge on Question 1

-

It was pretty clear to me at that stage that Qualcomm had won on Question 2 and Question 3 because if they had not, Question 1 would have been an easy decision

-

Around 1 pm, the court reconvened. The judge said nothing had changed, and she was ready to accept the decisions for Question 2 and Question 3.

-

Arm’s lawyer objected to that, saying Q1 and Q2 are linked, so she should not accept the partial verdict, quoting a possible precedent (Palo Alto vs. Juniper case)

-

The judge asked for details. The Arm team scrambled to get some specifics, but she was unconvinced about the relevance of the cited precedent

-

Arm’s lawyer suggested bringing back the jury on Monday, which the Judge summarily rejected, saying they had already spent more than 10 hours on deliberations and had decided on two questions unanimously. She added that Arm will have a chance for a retrial

-

Finally, the jury was brought to the room, and the judge asked each of the jurors whether they could decide on Question 1 if more time was given. All of them said no.

-

At that point, she received the verdict from the jury, and the clerk read it out

-

After the jury left, the judge came back to the room and discussed the next steps

-

She thought the case should have been settled instead of coming to trial

-

She will not entertain a quick retrial to come to her court. Instead, she will order mediation for the companies. She even offered to recommend good mediators who have worked on complex cases. But all of that will be next year

-

With that, the judge wished everyone happy holidays and dismissed the court

Qualcomm vs. Arm

When the legal struggle between long-term allies Qualcomm and Arm became public, everybody thought it was an innocuous case that would quickly settle. Although I believe that is still the case, the recent uptick in hostilities points to a more convoluted battle.

It all started when Qualcomm announced and finally acquired processor design startup Nuvia in 2021. Nuvia was developing a new CPU architecture that it claims is superior to anything in the market. Qualcomm has publicly stated that it will use Nuvia designs and the team for its entire portfolio, including smartphones, tablets, PCs, Automotive, IoT and others.

Nuvia’s designs run Arm’s instruction set. It had an Instruction Set Architecture (ISA) license from Arm, with certain licensing fees. This license is also known as Architecture License Agreement (ALA) in legal documents. Since Qualcomm also has an ALA with Arm, with a different licensing fee structure, there is a difference of opinion between Qualcomm and Arm on which contract should apply to Nuvia’s current designs and its evolutions.

If you want to know more about the types of licensing Arm offers and other details, check out this article.

According to the court documents, the discussions between Qualcomm and Arm broke down, and unexpectedly, Arm unilaterally canceled Nuvia’s ALA and asked it to destroy all its designs. It even demanded that Qualcomm not use Nuvia engineers for any CPU designs for three years. Arm officially filed the case against Qualcomm on August 31, 2022.

Qualcomm filed its reply on September 30, 2022, summarily rejecting Arm’s claims. Following that, on October 26, 2022, Qualcomm filed an amendment alleging that Arm misrepresented Qualcomm’s license agreement in front of Qualcomm’s customers. Further, it asked the court to enjoin Arm from such actions.

Why is Arm really suing Qualcomm? Is it about the PC market?

Easy question first. No, it’s not just about the PC market. Qualcomm’s intention to use Nuvia designs across its portfolio is an issue for Arm.

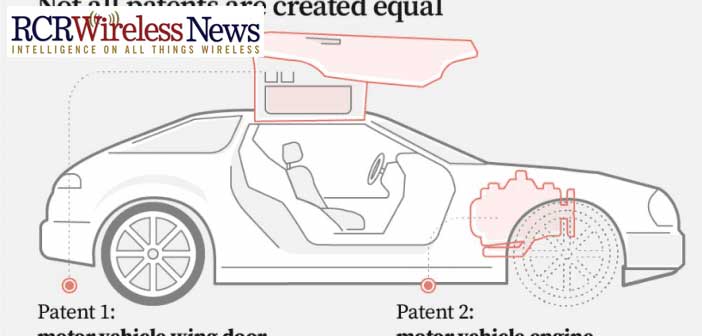

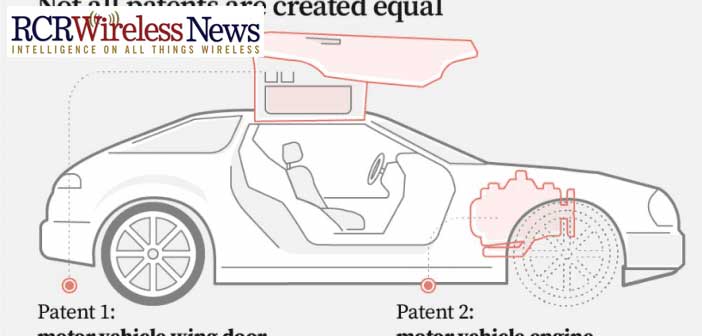

Qualcomm has both an ALA and a Technology License Agreement (TLA) with Arm. The former is required if you are using only Arm’s instruction set, and the latter if you use cores designed by Arm. TLA fees are substantially higher than ALA fees. Qualcomm currently uses Arm cores and TLA licensing. According to Strategy Analytics analyst Sravan Kundojjala, it pays an estimated 20-30 cents per chip to Arm.

Since Qualcomm negotiated the contract years ago, its ALA rate is probably very low. So, if Qualcomm adopts Nuvia designs for its entire portfolio, it will only pay this lower ALA fee to Arm. For Arm, that puts all the revenue coming from Qualcomm at risk. That is problematic for Arm, especially when it is getting ready for its IPO.

With the Nuvia acquisition, Arm saw an opportunity to renegotiate Qualcomm’s licensing contract. Moreover, Nuvia’s ALA rate must be much higher than Qualcomm’s. That is because of two reasons. First, Nuvia was a startup with little negotiation leverage. And second, it was designing higher-priced, low-volume chips, whereas Qualcomm primarily sells lower-priced, high-volume chips. So, it is in Arm’s favor to insist Qualcomm pay Nuvia’s rate. But Qualcomm disagrees, as it thinks its ALA covers Nuvia designs.

Core questions of the dispute

Notwithstanding many claims and counterclaims, this is purely a contract dispute and boils down to these two core questions:

-

Does Nuvia’s ALA require mandatory consent from Arm to transfer its designs to a third party, Qualcomm?

-

Does Qualcomm’s ALA cover the designs they acquire from a third party, in this case, Nuvia?

Clearly, there is a disagreement between the parties regarding these questions. Since the contracts are confidential, we can only guess and analyze them based on the court filings. I am sure many things are happening behind the scenes as well.

Let’s start with the first one. Since Nuvia was a startup, its acquisition by a third party was a given. I am assuming there is some language about this in the ALA. But, interestingly, Arm, in its complaint, hasn’t cited any specific clause of the contract supporting this. Arm only claims Nuvia requires consent. The argument that Arm didn’t want to disclose that in a public document doesn’t hold either. They could have cited the clause with the details redacted, just like other clauses mentioned there.

In the amended Qualcomm filing, there is some language about needing consent to “assign” the license to the new owner. But according to Qualcomm, it doesn’t need this license “assignment” as it has its own ALA.

If there is no specific clause in the Nuvia ALA regarding acquisition by an existing Arm licensee, then shame on the Arm contract team.

There is not much clarity on the second question. Most of Arm’s claims in the case are related to Nuvia ALA. Qualcomm claims it has wide-ranging licensing contracts with Arm that cover using Nuvia’s designs. But I am sure this question about Qualcomm ALA will come up as the case progresses.

Closing thoughts

Qualcomm and Arm have been great partners for a long time. Together they have created a vast global ecosystem. However, recent developments point to a significant rift between the two. Especially, Qualcomm’s allegation about Arm threatening to cancel its license in front of Qualcomm’s customers is troubling. There was some alleged talk of Arm changing its business model and other extremities, which might also unnerve other licensees. We are yet to hear Arm’s reply to these allegations.

I think the widely anticipated out-of-court settlement is still the logical solution. When the case enters the discovery phase, both parties will have access to each other’s evidence and understand the relative strengths. Usually, that triggers a settlement.

In my opinion, neither party would be interested in a lengthy court battle. Arm is looking to IPO and doesn’t need this threat hanging over its head, which will spook its investors. Qualcomm is planning a major push into the PC market in collaboration with Microsoft, and is supposedly planning its future SoC roadmap on Nuvia designs. So, it would also like to end the uncertainty at the earliest. Notwithstanding this case, Qualcomm seems to be going ahead with its plans.

We are still in the very early stage of this case. Arm lawyers have extensive licensing experience, and Qualcomm’s lawyers are battle-hardened from their recent lawsuits against Apple and FTC. I will be closely watching the developments and writing about them. So, be on the lookout.

Meanwhile, if you want to read more articles like this and get an up-to-date analysis of the latest mobile and tech industry news, sign up for our monthly newsletter at TantraAnalyst.com/Newsletter, or listen to our Tantra’s Mantra podcast.

Prakash Sangam is the founder and principal at Tantra Analyst, a leading boutique research and advisory firm. He is a recognized expert in 5G, Wi-Fi, AI, Cloud and IoT. To read articles like this and get an up-to-date analysis of the latest mobile and tech industry news, sign-up for our monthly newsletter at TantraAnalyst.com/Newsletter, or listen to our Tantra’s Mantra podcast.

In the ongoing Qualcomm vs. Arm saga, the US District Court of Delaware recently released the case schedule, setting the stage for the battle. It reveals two critical dates. The first is for the discovery process, during which early settlements often occur. The second is the trial date, which lays out how long the litigants have to wait for a legal resolution. Usually, litigants who can wait longer have the upper hand, especially if the opposing party can’t handle the uncertainty and is pressed for time.

Unless either party realizes that their case is weak during discovery, we are in for a long battle. In such a case, Qualcomm has the luxury of time, which might push Arm toward a quicker and unfavorable settlement.

Note: If you would like to know the chronology of this battle, what the issues are, and what is at stake, check out my earlier article, “Qualcomm, Arm legal spat regarding Nuvia becomes more bitter.”

It’s a game of chicken

Typically, litigation between large companies, such as this, is a “game of chicken.” It’s a matter of who gives up first. There are a couple of stages where this “giving up” can happen.

The first is during the discovery phase, when both parties closely examine the other party’s evidence and other details. A good legal team can assess their case’s merits and, if weak, settle quickly.

If the discovery is inconclusive, the next thing both companies try to avoid is a long-drawn jury trial, which brings all the dirty laundry into the open. So, most such cases get settled before the jury trial begins. Even if the case goes to trial and is decided in the lower courts, litigants with the luxury of time can keep appealing to higher courts and delay the final verdict. So, it all boils down to who has the time advantage and can stay longer without succumbing to market, business, and other pressures.

Time is on Qualcomm’s side

In the Qualcomm vs. Arm case, discovery is set to start on January 13th, 2023, and the trial on September 23rd, 2024. The actual trial is almost two years away. In such a case, I think Qualcomm has the time advantage, for a few reasons:

-

Qualcomm can keep using Nuvia IP without any issues till the matter is resolved

-

Based on precedent, it is highly unlikely that Arm will get an injunction against Qualcomm. And probably realizing this, Arm has not yet even asked for a preliminary one. This means Qualcomm can keep making and selling chips based on the disputed IP while the case drags on.

-

No matter who wins, the other party will most likely appeal, which might extend the case to 2025 or even 2026.

-

Qualcomm can indemnify and mitigate the risks of OEMs using the disputed IP

-

Qualcomm is initially targeting the laptop/compute market with Nuvia IP through its newly announced Oryon CPU core. Arm might be thinking that, because of the litigation, OEMs will be discouraged from developing products based on Oryon. However, Qualcomm can easily address that by indemnifying any investment risks OEMs face.

-

Since OEMs will initially utilize Oryon for fewer models, the overall shipments will be relatively small. Hence, indemnification is quite feasible for Qualcomm.

-

This will be an easy decision for OEMs – their limited initial investments for experimenting with the new platform are protected, while the possible future upside is enormous.

-

Qualcomm’s litigation prowess and recent successes against giants like Apple and FTC will give a lot of confidence to OEMs.

-

SoftBank would like to IPO Arm as soon as it can. However, the uncertainty of this case will significantly depress its valuation.

-

This case puts Arm’s future revenue from Qualcomm at risk. If Arm loses, its revenue from Qualcomm will be reduced to a paltry architecture license fee of 2 to 3 cents per device (estimated), roughly an order of magnitude lower than the current rate.

-

If Arm wins, there is a considerable upside. That might attract some risky investors, but they will demand a discount from SoftBank/Arm for that risk.

-

Qualcomm’s strong track record in litigation will also affect investor sentiment.

-

SoftBank may not want to wait till the case is over for IPO

-

If the case drags on till 2026, that is a long time in the tech industry. A lot of things can change. For example, competing architectures like RISC-V might become more prominent. Qualcomm recently said it has already shipped 650 million RISC-V-based microcontrollers. Many major companies, including Google, Intel, and AMD, are members of the RISC-V group. This might reduce Arm’s valuation if SoftBank waits longer for the IPO.

-

SoftBank’s other bets are also not doing so great. The upcoming slowdown in the tech industry might push it to dispose of Arm sooner rather than later.

Arguably, the prolonged litigation will also have some adverse impact on Qualcomm. As indicated many times by its CEO Cristiano Amon and other executives, Qualcomm plans to build on Nuvia IP and use it across its portfolio, from smartphones to automotive and IoT. The uncertainty might affect its long-term strategy, roadmap planning, and R&D investments. This might incentivize an early settlement but is not significant enough to compel them to do it.

Closing thoughts

The Qualcomm vs. Arm case has become a game of chicken. There might be a settlement based on discovery. If not, SoftBank/Arm can’t afford prolonged litigation, but Qualcomm can. This might push Arm to settle sooner and at terms more favorable to Qualcomm.

There are some other considerations as well, such as the worst- and best-case scenarios, how the recent appointment of Qualcomm veteran and previous CEO Paul Jacobs to the Arm board affects the dynamics of the case, and so on. Those will be the subject of my future article. So, be on the lookout.

SoftBank is reportedly planning an Arm IPO while locked in a high-stakes legal battle with Qualcomm. Although the case is becoming a game of chicken, because of its enormous impact on many stakeholders, including the litigants, the huge Arm ecosystem and especially the two major markets – personal and cloud computing – it is worthwhile to understand the best- and worst-case scenarios.

Before analyzing those, it is also important to address lingering questions and confusion about the case.

Clearing the confusion and misconceptions

Generally, anything related to licensing is shrouded in secrecy, creating confusion. Fortunately, the court filings have clarified some questions and misconceptions people have.

Is the case only about Nuvia Intellectual Property (IP), or does it affect the other licenses Qualcomm has with Arm?

It’s only about Nuvia’s Architecture License Agreement (ALA) with Arm and products based on Nuvia IP. None of Qualcomm’s other designs and products, which are covered by its existing license with Arm, are affected.

The case will be in court for more than 2-3 years. Can Qualcomm develop and commercialize products based on Nuvia IP during that time?

Yes. So far, Arm has not asked for injunctive relief against Qualcomm. Even if it asks, it is highly unlikely that one will be granted. Injunctions are hard to get in the US, and Arm must explain why it waited so long. So, Qualcomm can use Nuvia’s IP while the case drags on in the courts. Qualcomm has already announced the Oryon CPU based on Nuvia IP and is working with several PC OEMs.

Can Arm unilaterally cancel its licenses with Qualcomm?

No. In the court filings, Qualcomm claimed to have a legally binding, multi-year licensing contract. So, unless Qualcomm violates any conditions of the agreement, Arm can’t unilaterally cancel the licenses.

As alleged in one of the court filings, can Arm change its practice of licensing to chipset vendors and instead license to device OEMs?

Arm can’t change licenses of existing licensees such as Qualcomm, Apple and many others, as they have legally binding agreements. But for any new licenses, Arm is free to engage with anybody it wishes, including device OEMs.

With these questions clarified, let’s look at the various scenarios.

Best and worst cases for Qualcomm

The best case for Qualcomm would be winning the case. That means it will keep paying Arm at its current ALA rate for all the products that incorporate Nuvia IP. The win would also let it disrupt the personal and cloud computing markets and revolutionize the smartphone, auto and other markets.

The absolute worst case would be losing the case and all the following appeals (more on this later). But a realistic worst-case scenario would be settling with terms favorable to Arm. That means its ALA rate will go up. It is hard to predict by how much. The upper bound will probably be the rate in Nuvia’s ALA.

Best and worst cases for Arm

Surprisingly, the best case for Arm is not winning the case and the court ordering Qualcomm to destroy its designs and products (more on that later). Instead, it is the case heavily tilting to its side, making Qualcomm settle on terms favorable to Arm, even before its IPO. Those terms will depend on Qualcomm’s success with Nuvia IP, Arm’s IPO valuation and SoftBank’s patience in extending the IPO timeline. It would be reasonable to assume that the upper bound would be the Nuvia ALA rate.

A settlement would also help calm the nerves of its other licensees.

The worst-case scenario for Arm is the status quo, where Qualcomm pays lower ALA rates instead of Technology License Agreement (TLA) rates of 20-30 cents per device for all devices based on Nuvia IP. In my view, this is one of the reasons for Arm to go the litigation route – there is a significant potential upside if it can force Qualcomm to pay more. The downside is tied to litigation costs, and a long, protracted legal fight can cost hundreds of millions of dollars.

There will be a substantial downside in the medium and long term, especially considering its IPO plans. A significant part of its revenue from Qualcomm is at risk if the latter moves all its designs to Nuvia IP and starts paying meager ALA rates. Additionally, this fight will unsettle the Arm ecosystem, and many licensees, including Qualcomm, will move aggressively to the competing RISC-V architecture. All of these will reduce Arm’s IPO valuation.
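To get a rough feel for the revenue at stake, here is a back-of-the-envelope sketch. It uses only the per-device estimates quoted in these articles (20-30 cents under the TLA, 2-3 cents under the ALA). The annual device volume is a purely hypothetical placeholder I picked for illustration, not a reported figure, and the function names are my own.

```python
# Back-of-the-envelope comparison of yearly licensing revenue under
# TLA-style vs. ALA-style per-device rates.
# Rates are the estimates quoted in the articles, expressed in cents so the
# arithmetic stays in integers. The device volume is a HYPOTHETICAL
# placeholder, not a reported number.

TLA_RATE_CENTS = (20, 30)  # estimated 20-30 cents per device
ALA_RATE_CENTS = (2, 3)    # estimated 2-3 cents per device
HYPOTHETICAL_DEVICES_PER_YEAR = 500_000_000  # assumption for illustration


def annual_revenue_usd(rate_cents, devices):
    """Return (low, high) yearly revenue in USD for a per-device rate range."""
    low, high = rate_cents
    return (low * devices / 100, high * devices / 100)


def revenue_at_risk_usd(tla_cents, ala_cents, devices):
    """Return (smallest, largest) yearly revenue drop if every device moves
    from the TLA rate range to the ALA rate range."""
    smallest = (tla_cents[0] - ala_cents[1]) * devices / 100
    largest = (tla_cents[1] - ala_cents[0]) * devices / 100
    return (smallest, largest)


if __name__ == "__main__":
    devices = HYPOTHETICAL_DEVICES_PER_YEAR
    print("TLA revenue range (USD):", annual_revenue_usd(TLA_RATE_CENTS, devices))
    print("ALA revenue range (USD):", annual_revenue_usd(ALA_RATE_CENTS, devices))
    print("At risk (USD):", revenue_at_risk_usd(TLA_RATE_CENTS, ALA_RATE_CENTS, devices))
```

Whatever volume you plug in, the estimated rates imply that a full move from TLA to ALA pricing would leave Arm with only about a tenth of the per-device revenue it collects today.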

Absolute worst-case for everybody

The absolute worst case for the litigants and the industry will be Arm winning the case and the court agreeing to its request that Qualcomm destroy all its designs and products based on Nuvia IP. I think it is highly unlikely to happen. If at all, the court might order Qualcomm to pay punitive damages, but ordering the destruction of years and billions of dollars’ worth of work, some of which consumers would already be using, seems excessive.

For the sake of argument, if all the designs were to be destroyed, Arm would have lost its biggest opportunity to expand its influence in the personal and cloud computing market. Among all its licensees, Qualcomm is the strongest and has the best chance to succeed in those markets. For Arm, this would be akin to winning the battle but losing the war. I think Arm is smart enough not to let that happen.

Similarly, such destruction would be bad for Qualcomm as well. It would have lost all the time and money invested in buying Nuvia and developing products based on its IP. That will also sink its chances of disrupting personal and possibly cloud computing markets. Again, just like Arm, Qualcomm is smart enough not to let that happen.

Losing such an opportunity to disrupt large markets such as personal and cloud computing would also be a major loss for the tech industry. This will also make any of Arm’s licensees almost impossible to acquire and the whole Arm ecosystem jittery. That will be a significant hurdle in the ecosystem’s otherwise smooth and expansive proliferation.

Hence, my money is on settlement. The only questions are when and on what terms.

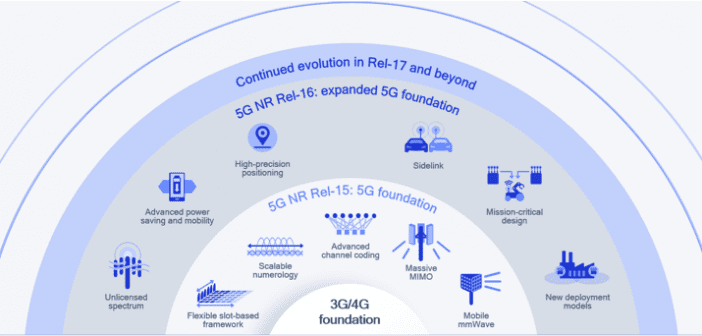

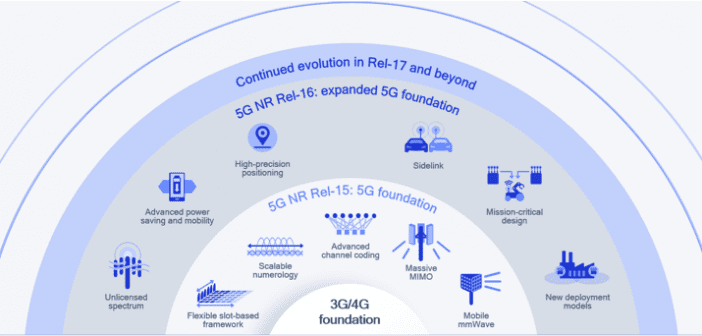

The Chronicles of 3GPP Rel. 17

Have you ever felt the joy and elation of being part of something that you have only been observing, reading, writing about, and admiring for a long time? Well, I experienced that when I became a member of 3GPP (3rd Generation Partnership Project) and attended RAN (Radio Access Network) plenary meeting #84 last week in the beautiful city of Newport Beach, California. RAN group is primarily responsible for coming up with wireless or radio interface related specifications.

The timing couldn’t be more perfect. This specific meeting was, in fact, the kick-off of 3GPP Rel. 17 discussions. I have written extensively about 3GPP and its processes on RCR Wireless News. You can read all of them here. Attending the first-ever meeting on a new release was indeed very exciting. I will chronicle the journey of Rel. 17, through a series of articles here on RCR Wireless News, and this is the first one. I will report the developments and discuss what those mean for the wireless as well as the many other industries 5G is set to touch and transform. If you are a standards and wireless junkie, get on board, and enjoy the ride.

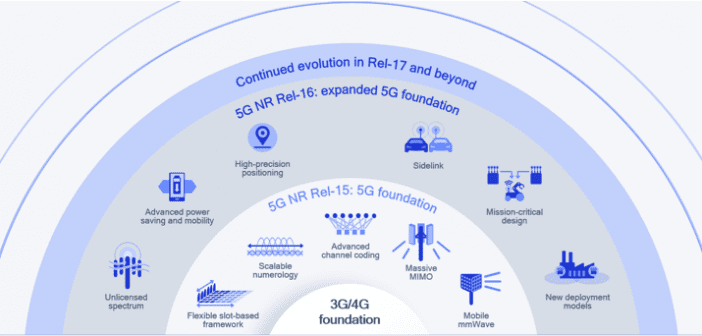

3GPP Rel. 17 is coming at an interesting time. It is coming after the much publicized and accelerated Rel. 15 that introduced 5G, and Rel. 16 that put a solid foundation for taking 5G beyond mobile broadband. Naturally, the interest is in what more 5G could do. The Rel. 17 kick-off meeting, as expected, was a symposium of great ideas, and a long wish list from prominent 3GPP members. Although many of the members submitted their proposals, only a few, selected through a lottery system, got the opportunity to present in the meeting. Nokia, KPN, Qualcomm, Indian SSO (Standard Setting Organization), and a few others were among the ones who presented. I saw two clear themes in most of the proposals: First, keeping enough of 3GPP’s time and resources free to address urgent needs stemming from the nascent 5G deployments; second, addressing the needs of new verticals/industries that 5G enables.

Rel. 17 work areas

There were a lot of common subjects in the proposals. All of those were consolidated into four main work areas during the meeting:

-

Topics for which the discussion can start in June 2019

-

The main topics in this group include mid-tier devices such as wearables without extreme speeds or latency, small data exchange during the inactive state, D2D enhancements going beyond V2X for relay-kind of deployments, support for mmWave above 52.6 GHz, Multi-SIM, multicast/broadcast enhancements, and coverage improvements

-

-

Topics for which the discussion can start in September 2019

-

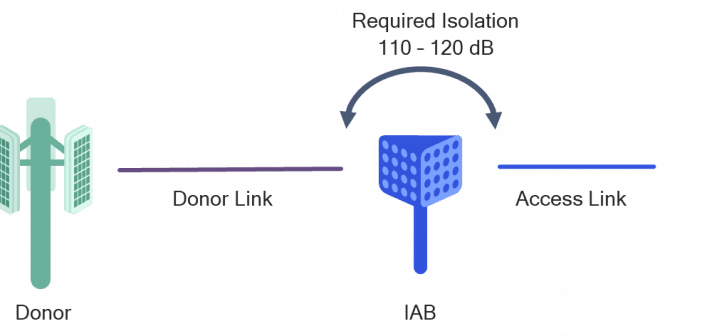

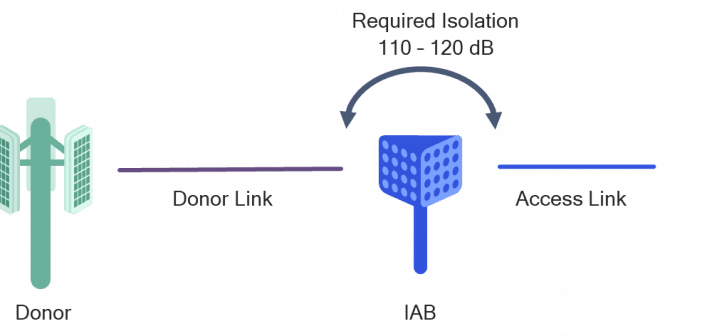

These include Integrated Access Backhaul (IAB), unlicensed spectrum support and power-saving enhancements, eMTC/NB-IoT in NR improvements, data collection for SON and AI considerations, high accuracy, and 3D positioning, etc.

-

-

Topics that have a broad agreement that can be directly proposed as Work Items or Study Items in future meetings

-

1024 QAM and others

-

-

Topics that don’t have a wider interest or the ones proposed by single or fewer members

As the chair emphasized many times, the objective of forming these work areas was only to facilitate discussions between the members to come to a common understanding of what is needed. They were divided into the June and September timeframes purely for logistical reasons. This doesn’t imply any priority between the two groups. Many of the September work areas would be enhancements to items still being worked on in Rel. 16. Also, spacing them out better spreads the workload. Based on how the discussions pan out, the work areas could be candidates for Work Items or Study Items in the December 2019 plenary meeting.

Two specific topics caught my attention. First, making 5G even more suitable for XR (AR, VR, etc.) and second, AI. The first one makes perfect sense, as XR evolution will have even more stringent latency requirements and will need distributed processing capability between the device and the edge-cloud. However, I am not so sure about AI. I don’t know how much scope there is to standardize AI, as it doesn’t necessarily require interoperability between devices of different vendors. Also, I doubt companies would be interested in standardizing AI algorithms, as doing so would diminish their competitive edge.

Apart from technical discussions, there were questions and concerns regarding the US Government’s order to ban Huawei. This was the first major RAN plenary meeting after the executive order imposing the ban was issued. From the discussions, it seemed like “business as usual.” We will know the real effects when the detailed discussions start in the coming weeks.

On a closing note, many compare the standardization process to watching a glacier move. On the contrary, I found it to be very interesting and amusing, especially how the consensus process among competitors and collaborators works. The meeting was always lively, with a lot of arguments and counter-arguments. We will see whether my view changes in the future! So, tune in to updates from future Rel. 17 meetings to hear about the progress.

I just returned from a whirlwind session of 3GPP RAN Plenary #86, held at the beautiful beach town of Sitges in Spain. The meeting finalized a comprehensive package with more than 30 Study and Work Items (SI and WI) for Rel 17. With a mix of new capabilities and significant improvements to existing features, Rel 17 is set to define the future of 5G. It is expected to be completed by mid or end of 2021.

<<Side note, if you would like to understand more about how 3GPP works, read my series “Demystifying Cellular Standards and Licensing” >>

Although the package looks like a laundry list of features, it gives a window into the strategy and capabilities of different member companies. Some are keen on investing in new, path-breaking technologies, while others are looking to optimize existing features or to work on fringe or very specific areas.

The Rel. 17 SIs and WIs can be divided into three main categories.

Blazing new trail

These are the most important new concepts being introduced in Rel. 17 that promise to expand 5G’s horizon.

XR (SI) – The objective of this is to evaluate and adopt improvements that make 5G even better suited for AR, VR, and MR. It includes evaluating distributed architecture harnessing the power of edge-cloud and device capabilities to optimize latency, processing, and power. Lead (aka Rapporteur) – Qualcomm

NR up to 71 GHz (SI and WI) – This is in the new section because of a twist. The WI is to extend the current NR waveform up to 71 GHz, and SI is to explore new and more efficient waveforms for the 52.6 – 71 GHz band. Lead – Qualcomm and Intel

NR-Light (SI) – The objective is to develop cost-effective devices with capabilities that lie between the full-featured NR and Low Power Wide Area (LPWA) technologies (e.g., NB-IoT/eMTC). For example, devices that support speeds of tens of Mbps or 100 Mbps, rather than multi-gigabit speeds. The typical use cases are wearables, Industrial IoT (IIoT), and others. Lead – Ericsson

Non-Terrestrial Network (NTN) support for NR & NB-IoT/eMTC (WI) – A typical NTN is the satellite network. The objective is to address verticals such as Mining and Agriculture, which mostly lie in remote areas, as well as to enable global asset management, transcending continents and oceans. Lead – MediaTek and Eutelsat

Perfecting the concepts introduced in Rel. 16

Rel. 16 was a short release with an aggressive schedule. It improved upon Rel. 15 and brought in some new concepts. Rel 17 is aiming to make those new concepts well rounded.

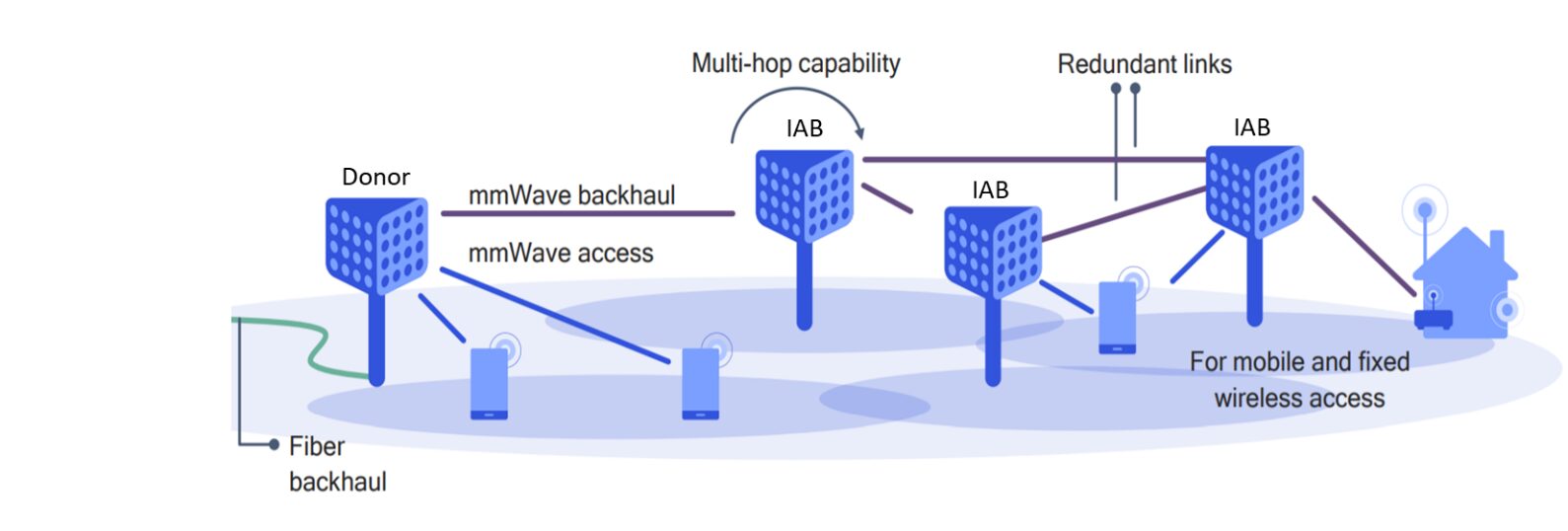

Integrated Access & Backhaul – IAB (WI) – Enable cost-effective and efficient deployment of 5G by using wireless for both access and backhaul, for example, using relatively low-cost and readily available millimeter wave (mmWave) spectrum in IAB mode for rapid 5G deployment. Such an approach is especially useful in regions where fiber is not feasible (hilly areas, emerging markets). Lead – Qualcomm

Positioning (SI) – Achieve centimeter-level accuracy, based only on cellular connectivity, especially indoors. This is a key feature for wearables, IIoT, and Industry 4.0 applications. Lead – CATT (NYU)

Sidelink (WI) – Expand use cases from V2X-only to public safety, emergency services, and other handset-based applications by reducing power consumption and latency and improving reliability. Lead – LG

Small data transmission in “Inactive” mode (WI) – Enable such transmission without going through the full connection set-up to minimize power consumption. This is extremely important for IIoT use cases such as sensor updates, and also for smartphone messaging apps such as WhatsApp, QQ, and others. Lead – ZTE

IIoT and URLLC (WI) – Evaluate and adopt any changes that might be needed to use the unlicensed spectrum for these applications and use cases. Lead – Nokia

Fine-tuning the performance of basic features introduced in Rel. 15

Rel. 15 introduced 5G. Its primary focus was enabling enhanced Mobile Broadband (eMBB). Rel. 16 enhanced many eMBB features, and Rel. 17 is now trying to optimize them even further, especially based on the learnings from the early 5G deployments.

Further enhanced MIMO – FeMIMO (WI) – This improves the management of beamforming and beamsteering and reduces associated overheads. Lead – Samsung

Multi-Radio Dual Connectivity – MRDC (WI) – Mechanism to quickly deactivate unneeded radio when user traffic goes down, to save power. Lead – Huawei

Dynamic Spectrum Sharing – DSS (WI) – DSS had a major upgrade in Rel 16. Rel 17 is looking to facilitate better cross-carrier scheduling of 5G devices to provide enough capacity when their penetration increases. Lead – LG

Coverage Extension (SI) – Since many of the spectrum bands used for 5G will be higher than 4G (even in Sub 6 GHz), this will look into the possibility of extending the coverage of 5G to balance the difference between the two. Lead – China Telecom and Samsung

Along with these, many other SIs and WIs, including Multi-SIM, RAN Slicing, Self Organizing Networks, QoE Enhancements, NR-Multicast/Broadcast, UE power saving, etc., were adopted into Rel. 17.

Other highlights of the plenary

Unlike previous meetings, there were more delegates from non-cellular companies this time, and they participated very actively in the discussions as well. For example, a representative from Bosch was a passionate proponent of automotive needs in the Sidelink enhancements. I also spoke with people who facilitate the discussion between 3GPP and the industry body 5G Automotive Association (5GAA). This is an extremely welcome development, considering that 5G will transform these industries. Incorporating their needs at the grassroots level during the standards definition phase allows the ecosystem to build solutions that are market-ready for rapid deployment.

There was a rare, very contentious debate in a joint session between the RAN and SA groups. The debate was whether to set the RAN SI and WI completion timeline to 15 months, as currently planned, or extend it to 18 months. The reason for the latter is that TSG-SA is late with Rel. 16 completion and, consequently, lagging in Rel. 17. Setting an 18-month completion target for RAN would allow SA to catch up and align both groups to finish Rel. 17 simultaneously. However, RAN, which runs a tight ship, is not happy with the delay. Even after a lengthy discussion, the issue remains unresolved.

<<Side Note: If you would like to know the organization of different 3GPP groups, including TSGs, check out my previous article “Who are the unsung heroes that create the standards?” >>

I would be remiss if I didn’t mention the excellent project management skills exhibited by the RAN chair, Mr. Balazs Bertenyi of Nokia Bell Labs. Without his firm, yet logical and unbiased decision making, it would have been impossible to finalize all these things in a short span of four days.

In closing

Rel. 17 is a major release in the evolution of 5G that will expand its reach and scope. It will 1) enable new capabilities for applications such as XR; 2) create new categories of devices with NR-Light; 3) bring 5G to new realms such as satellites; 4) make possible the Massive IoT and Mission Critical Services vision set out at the beginning of 5G; while also improving on the excellent start 5G has gotten with Rel. 15 and eMBB. I, for one, feel fortunate to witness it transform from concept to completion.

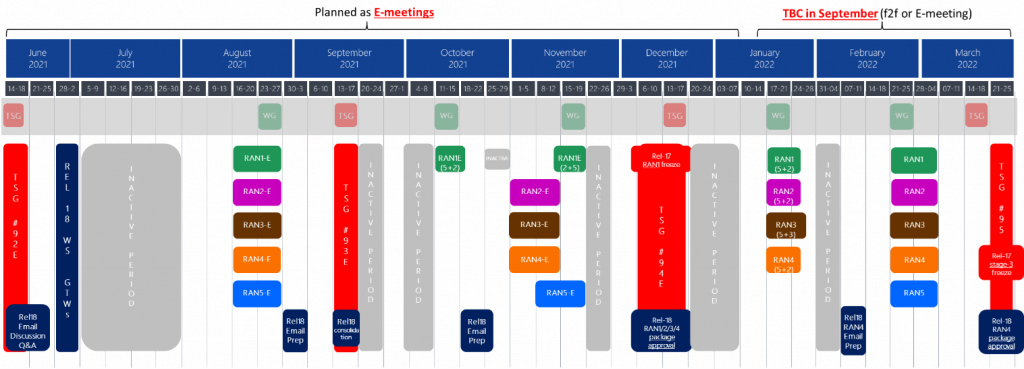

With the COVID-19 novel coronavirus creating havoc and upsetting everybody’s plans, the question on the minds of many people who follow standards development is, “How will it affect the 5G evolution timeline?” The question is even more relevant for Rel. 16, which is expected to be finalized by June 2020. I talked at length about this with two key leaders of the industry body 3GPP: Mr. Balazs Bertenyi, Chair of the RAN TSG, and Mr. Wanshi Chen, Chair of the RAN1 Working Group (WG). The message from both was that Rel. 16 will be delivered on time. The Rel. 17 timelines were a different story, though.

<<Side note: If you would like to know more about 3GPP TSGs and WGs, refer to my article series “Demystifying Cellular Patents and Licensing.” >>

3GPP meetings are spread throughout the year. Many of them are large conference-style gatherings involving hundreds of delegates from across the world. WG meetings happen almost monthly, whereas TSG meetings are held quarterly. The meetings are usually distributed among major member countries, including the US, Europe, Japan, and China. In the first half of the year, there were WG meetings scheduled in Greece in February, and Korea, Japan, and Canada in April, as well as TSG meetings in Jeju, South Korea in March. But because of the virus outbreak, all those face-to-face meetings were canceled and replaced with online meetings and conference calls. As it stands now, the next face-to-face meetings will take place in May, subject to the developments of the virus situation.

Since 3GPP runs on consensus, the lack of face-to-face meetings certainly raises concerns about the progress that can be made as well as its possible effect on the timelines. However, the duo of Mr. Bertenyi and Mr. Chen are working diligently to keep the well-oiled standardization machine going. Mr. Bertenyi told me that although face-to-face meetings are the best and the most efficient option, 3GPP is making elaborate arrangements to replace them with virtual means. They have adopted a two-step approach: 1) further expand the ongoing email-based discussions; 2) hold multiple simultaneous conference calls mimicking the actual meetings. “We have worked with the delegates from all participant countries to come up with a few convenient four-hour time slots, and will run simultaneous on-line meetings/conference calls and collaborative sessions to facilitate meaningful interaction,” said Bertenyi. “We have stress-tested our systems to ensure their robustness to support a large number of participants.”

Mr. Chen, who leads RAN1, the largest working group, says that they have already completed a substantial part of the Rel. 16 work and have achieved functional freeze. So, the focus is now on the RAN2 and RAN3 groups, whose work is in full swing. The current schedule is to achieve what is called ASN.1 freeze in June 2020. This milestone establishes a stable specification baseline from which vendors can start building commercial products.

It’s reasonable to say that, barring any further disturbances, Rel. 16 will be finalized on time. However, things are less certain for Rel. 17. Mr. Bertenyi stated that based on the meeting cancellations, it seems inevitable that the Rel. 17 completion timeline will shift by three months to September 2021.

It goes without saying that the plans are based on the current state of affairs in the outbreak. If the situation changes substantially, all the plans will be up in the air. I will keep monitoring the developments and report back. Please make sure to sign up for our monthly newsletter at TantraAnalyst.com/Newsletter to get the latest on standardization and the telecom industry at large.